When your site gets hacked, completely replacing it might not be enough.

Your website is under attack every day. Unfortunately, everyone with a website is at risk of getting hacked.

sem[c] was brought in to help with a site that had previously been hacked and fixed but seemed to have been hacked again. The client reported that a number of site visitors had complained that the site had infected their computers with malware.

A number of things need to be evaluated in a situation like this, and evaluating them requires seeing the problem in action. First, an on-site scan showed no evidence of a hack. This made sense, since the entire site had been restored from backup and its security had been tightened months earlier. It also strongly suggested that the site had NOT been hacked again.

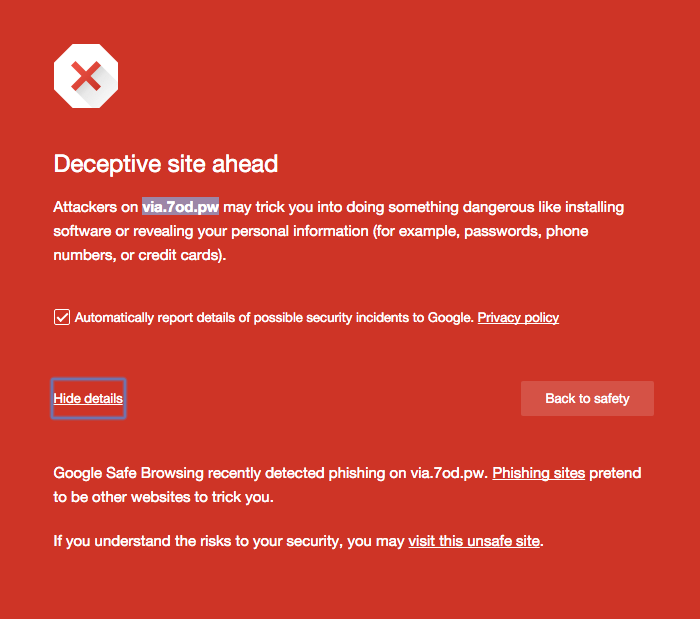

Checking the results of a Google search was the next step. In Chrome on a Mac, clicking the search result that should have led to the company’s front page brought up a full-screen red warning stating that the link led to a site known to distribute malware.

It is important to note that the URL named in the warning (via.7od.pw) was not the company’s address. It acted as a kind of “man in the middle” that redirected visitors to spam sites. Tests with other browsers did not show the red interstitial warning; instead they loaded random spam pages, including some that attempted to install malware.

Our conclusion? Google’s index itself was corrupted. This helped explain why Google was reporting nearly twenty thousand crawl errors for the site. It also explained why both the Google and Bing bots were constantly requesting non-existent pages from the site. It has long been alleged that Bing draws on Google’s results in its own algorithm, which may explain why Bing’s crawler was affected as well.
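One way to confirm that crawlers are repeatedly requesting non-existent pages is to tally 404 responses in the web server’s access log. The sketch below is illustrative, not the method sem[c] used: it assumes the log is in the Apache/Nginx Combined Log Format, and it identifies crawlers by the substrings `googlebot` and `bingbot` in the User-Agent header. The function name `count_bot_404s` is hypothetical.

```python
import re
from collections import Counter

# Assumed Combined Log Format:
# host ident user [time] "METHOD path PROTOCOL" status size "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

# Assumed User-Agent substrings (lowercase) that identify each crawler.
CRAWLERS = {"Googlebot": "googlebot", "Bingbot": "bingbot"}


def count_bot_404s(lines):
    """Hypothetical helper: count 404 responses served to each crawler,
    plus how often each missing path was requested by any crawler."""
    per_bot = Counter()
    paths = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        # Skip lines that don't parse and responses that aren't 404s.
        if not match or match.group("status") != "404":
            continue
        agent = match.group("agent").lower()
        for name, marker in CRAWLERS.items():
            if marker in agent:
                per_bot[name] += 1
                paths[match.group("path")] += 1
                break
    return per_bot, paths


if __name__ == "__main__":
    import sys
    # Usage (assumed log path): python count_404s.py /var/log/nginx/access.log
    with open(sys.argv[1], encoding="utf-8", errors="replace") as log:
        per_bot, paths = count_bot_404s(log)
    print(dict(per_bot))
    for path, hits in paths.most_common(10):
        print(hits, path)
```

User-Agent strings can be spoofed, so a high count here is a signal rather than proof; Google documents a reverse DNS lookup for verifying that a request genuinely came from Googlebot.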

The negative SEO implications are serious. Visitors searching on Google were at best warned away from the company’s site and at worst exposed to malware. The site’s performance also risked degradation from thousands of requests for non-existent pages. Together, these problems created a serious trust issue with the site’s visitors.

The solution was less obvious. One approach would have been to use Google Webmaster Tools to remove each of the twenty thousand spurious URLs one at a time. Instead, sem[c] was able to communicate to Google that its index had errors and needed to be updated.